
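I wanted to render 3D data to 2D with Blender (more precisely, to project each 3D model onto 2D from cameras at various angles and save the results as images), but there was too much data to do it through the GUI. This post records how to render 3D models to 2D images with Python code instead.

The code is based on the following GitHub repository.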

https://github.com/nv-tlabs/GET3D/tree/master/render_shapenet_data


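Installing Blender

I worked on Ubuntu 20.04, with Blender installed inside a Docker container.

First, download Blender from the link below. Blender can also be installed via snap, but running the code that way produced permission errors and read-only file system errors, so I downloaded the archive directly. The GitHub repository says the code was written against version 2.90.0, so I also downloaded 'blender-2.90.0-linux64.tar.xz'.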

https://www.blender.org/download/previous-versions/


Once extracted, the archive is ready to use. To run Blender from the command line (for scripting), however, create a symbolic link with the following command in a terminal.

sudo ln -s [blender_directory]/blender /usr/local/bin/blender
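To confirm that the symbolic link works, something like the following can be run from any directory. This is a minimal sketch, not part of the original setup: the helper name `blender_version` is my own, and it only assumes that the executable is reachable as `blender` on the PATH and accepts `--version`.

```python
import shutil
import subprocess
from typing import Optional


def blender_version(executable: str = "blender") -> Optional[str]:
    """Return the first line printed by `<executable> --version`.

    Returns None when the executable cannot be found on the PATH.
    `blender_version` is a hypothetical helper name used for illustration.
    """
    path = shutil.which(executable)
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"], capture_output=True, text=True, check=False
    )
    lines = result.stdout.splitlines()
    return lines[0] if lines else ""
```

Calling `print(blender_version() or "blender not found on PATH")` should then print a version line if the link was created correctly.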

A few more steps are needed to drive Blender from a Python script.

First, install the bpy package (Blender Python) as follows.

pip install bpy

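Then install a few system libraries (on the local machine or inside the container), and install numpy into the Python interpreter bundled with Blender.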

apt-get install -y libxi6 libgconf-2-4 libfontconfig1 libxrender1

cd [blender_dir]/2.90/python/bin

./python3.7m -m ensurepip

./python3.7m -m pip install numpy

Rendering with Python

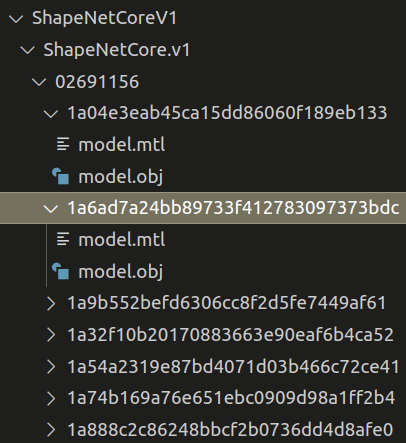

The 3D dataset to render is ShapeNetCoreV1; part of its directory structure is shown in the accompanying image.

Now let's look at render_all.py and render_shapenet.py from the GitHub repository.

Adapt the code to your own dataset and needs.
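Before adapting the scripts, it can help to check that a dataset follows the layout render_all.py walks, namely `<dataset_folder>/<synset>/<model_id>/model.obj`. The sketch below lists the models that match; `find_models` is a hypothetical helper of mine, not something from the repository.

```python
import os
from typing import List


def find_models(dataset_folder: str, synset: str) -> List[str]:
    """List every <dataset_folder>/<synset>/<model_id>/model.obj that exists.

    Hypothetical helper: it mirrors the directory walk in render_all.py but
    skips model folders that do not contain a model.obj file.
    """
    synset_dir = os.path.join(dataset_folder, synset)
    models = []
    for model_id in sorted(os.listdir(synset_dir)):
        obj_path = os.path.join(synset_dir, model_id, "model.obj")
        if os.path.isfile(obj_path):
            models.append(obj_path)
    return models
```

For example, `len(find_models("./ShapeNetCoreV1/ShapeNetCore.v1", "02958343"))` would give the number of car models that can actually be rendered.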

render_all.py

# Copyright (c) 2022, NVIDIA CORPORATION & AFFILIATES. All rights reserved.
#
# NVIDIA CORPORATION & AFFILIATES and its licensors retain all intellectual property
# and proprietary rights in and to this software, related documentation
# and any modifications thereto. Any use, reproduction, disclosure or
# distribution of this software and related documentation without an express
# license agreement from NVIDIA CORPORATION & AFFILIATES is strictly prohibited.
import os
import argparse

parser = argparse.ArgumentParser(description='Renders given obj file by rotation a camera around it.')
parser.add_argument(
    '--save_folder', type=str, default='./tmp',
    help='path for saving rendered image')
parser.add_argument(
    '--dataset_folder', type=str, default='./tmp',
    help='path for downloaded 3d dataset folder')
parser.add_argument(
    '--blender_root', type=str, default='./tmp',
    help='path for blender')
args = parser.parse_args()

save_folder = args.save_folder
dataset_folder = args.dataset_folder
blender_root = args.blender_root

synset_list = [
    '02958343',  # Car
    '03001627',  # Chair
    '03790512'  # Motorbike
]
scale_list = [
    0.9,
    0.7,
    0.9
]

for synset, obj_scale in zip(synset_list, scale_list):
    file_list = sorted(os.listdir(os.path.join(dataset_folder, synset)))
    for idx, file in enumerate(file_list):
        render_cmd = '%s -b -P render_shapenet.py -- --output %s %s --scale %f --views 24 --resolution 1024 >> tmp.out' % (
            blender_root, save_folder, os.path.join(dataset_folder, synset, file, 'model.obj'), obj_scale
        )
        os.system(render_cmd)

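This script takes 'save_folder' (where the rendered images are saved), 'dataset_folder' (the dataset to render), and 'blender_root' (the path to the Blender executable) from the command line, then issues one rendering command per object, using the scale assigned to that object's category.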
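If the dataset paths contain spaces, or you want to see which models failed, the same command can be built as an argument list and run with `subprocess` instead of `os.system`. This is only a sketch of an alternative, not what the repository does: `build_render_cmd` and `render_one` are names I made up, and the flags are the ones that already appear in the two scripts.

```python
import subprocess
from typing import List


def build_render_cmd(blender_root: str, save_folder: str, obj_path: str,
                     obj_scale: float, views: int = 24,
                     resolution: int = 1024) -> List[str]:
    """Build the Blender command as a list, so no shell quoting is needed.

    Hypothetical helper. Everything after "--" is passed through to
    render_shapenet.py; the flags are those defined in that script.
    """
    return [
        blender_root, "-b", "-P", "render_shapenet.py", "--",
        "--output_folder", save_folder,
        obj_path,
        "--scale", str(obj_scale),
        "--views", str(views),
        "--resolution", str(resolution),
    ]


def render_one(cmd: List[str], log_path: str = "tmp.out") -> int:
    """Run one render, append its output to log_path, return the exit code."""
    with open(log_path, "a") as log:
        completed = subprocess.run(
            cmd, stdout=log, stderr=subprocess.STDOUT, check=False
        )
    return completed.returncode
```

A nonzero return value from `render_one` can then be used to collect the models that need to be rendered again.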

render_shapenet.py

# Copyright (c) 2022, NVIDIA CORPORATION & AFFILIATES. All rights reserved.
#
# NVIDIA CORPORATION & AFFILIATES and its licensors retain all intellectual property
# and proprietary rights in and to this software, related documentation
# and any modifications thereto. Any use, reproduction, disclosure or
# distribution of this software and related documentation without an express
# license agreement from NVIDIA CORPORATION & AFFILIATES is strictly prohibited.
import argparse, sys, os, math, re
import bpy
from mathutils import Vector, Matrix
import numpy as np
import json

parser = argparse.ArgumentParser(description='Renders given obj file by rotation a camera around it.')
parser.add_argument(
    '--views', type=int, default=24,
    help='number of views to be rendered')
parser.add_argument(
    'obj', type=str,
    help='Path to the obj file to be rendered.')
parser.add_argument(
    '--output_folder', type=str, default='/tmp',
    help='The path the output will be dumped to.')
parser.add_argument(
    '--scale', type=float, default=1,
    help='Scaling factor applied to model. Depends on size of mesh.')
parser.add_argument(
    '--format', type=str, default='PNG',
    help='Format of files generated. Either PNG or OPEN_EXR')
parser.add_argument(
    '--resolution', type=int, default=512,
    help='Resolution of the images.')
parser.add_argument(
    '--engine', type=str, default='CYCLES',
    help='Blender internal engine for rendering. E.g. CYCLES, BLENDER_EEVEE, ...')

argv = sys.argv[sys.argv.index("--") + 1:]
args = parser.parse_args(argv)

# Set up rendering
context = bpy.context
scene = bpy.context.scene
render = bpy.context.scene.render

render.engine = args.engine
render.image_settings.color_mode = 'RGBA'  # ('RGB', 'RGBA', ...)
render.image_settings.file_format = args.format  # ('PNG', 'OPEN_EXR', 'JPEG', ...)
render.resolution_x = args.resolution
render.resolution_y = args.resolution
render.resolution_percentage = 100
bpy.context.scene.cycles.filter_width = 0.01
bpy.context.scene.render.film_transparent = True

bpy.context.scene.cycles.device = 'GPU'
bpy.context.scene.cycles.diffuse_bounces = 1
bpy.context.scene.cycles.glossy_bounces = 1
bpy.context.scene.cycles.transparent_max_bounces = 3
bpy.context.scene.cycles.transmission_bounces = 3
bpy.context.scene.cycles.samples = 32
bpy.context.scene.cycles.use_denoising = True

def enable_cuda_devices():
    prefs = bpy.context.preferences
    cprefs = prefs.addons['cycles'].preferences
    cprefs.get_devices()

    # Attempt to set GPU device types if available
    for compute_device_type in ('CUDA', 'OPENCL', 'NONE'):
        try:
            cprefs.compute_device_type = compute_device_type
            print("Compute device selected: {0}".format(compute_device_type))
            break
        except TypeError:
            pass

    # Any CUDA/OPENCL devices?
    acceleratedTypes = ['CUDA', 'OPENCL']
    accelerated = any(device.type in acceleratedTypes for device in cprefs.devices)
    print('Accelerated render = {0}'.format(accelerated))

    # If we have CUDA/OPENCL devices, enable only them, otherwise enable
    # all devices (assumed to be CPU)
    print(cprefs.devices)
    for device in cprefs.devices:
        device.use = not accelerated or device.type in acceleratedTypes
        print('Device enabled ({type}) = {enabled}'.format(type=device.type, enabled=device.use))

    return accelerated

enable_cuda_devices()

context.active_object.select_set(True)
bpy.ops.object.delete()

# Import textured mesh
bpy.ops.object.select_all(action='DESELECT')

def bounds(obj, local=False):
    local_coords = obj.bound_box[:]
    om = obj.matrix_world

    if not local:
        worldify = lambda p: om @ Vector(p[:])
        coords = [worldify(p).to_tuple() for p in local_coords]
    else:
        coords = [p[:] for p in local_coords]

    rotated = zip(*coords[::-1])

    push_axis = []
    for (axis, _list) in zip('xyz', rotated):
        info = lambda: None
        info.max = max(_list)
        info.min = min(_list)
        info.distance = info.max - info.min
        push_axis.append(info)

    import collections

    originals = dict(zip(['x', 'y', 'z'], push_axis))

    o_details = collections.namedtuple('object_details', 'x y z')
    return o_details(**originals)

# function from https://github.com/panmari/stanford-shapenet-renderer/blob/master/render_blender.py
def get_3x4_RT_matrix_from_blender(cam):
    # bcam stands for blender camera
    # R_bcam2cv = Matrix(
    #     ((1, 0, 0),
    #      (0, 1, 0),
    #      (0, 0, 1)))

    # Transpose since the rotation is object rotation,
    # and we want coordinate rotation
    # R_world2bcam = cam.rotation_euler.to_matrix().transposed()
    # T_world2bcam = -1*R_world2bcam @ location
    #
    # Use matrix_world instead to account for all constraints
    location, rotation = cam.matrix_world.decompose()[0:2]
    R_world2bcam = rotation.to_matrix().transposed()

    # Convert camera location to translation vector used in coordinate changes
    # T_world2bcam = -1*R_world2bcam @ cam.location
    # Use location from matrix_world to account for constraints:
    T_world2bcam = -1*R_world2bcam @ location

    # # Build the coordinate transform matrix from world to computer vision camera
    # R_world2cv = R_bcam2cv@R_world2bcam
    # T_world2cv = R_bcam2cv@T_world2bcam

    # put into 3x4 matrix
    RT = Matrix((
        R_world2bcam[0][:] + (T_world2bcam[0],),
        R_world2bcam[1][:] + (T_world2bcam[1],),
        R_world2bcam[2][:] + (T_world2bcam[2],)
    ))
    return RT

imported_object = bpy.ops.import_scene.obj(filepath=args.obj, use_edges=False, use_smooth_groups=False, split_mode='OFF')

for this_obj in bpy.data.objects:
    if this_obj.type == "MESH":
        this_obj.select_set(True)
        bpy.context.view_layer.objects.active = this_obj
        bpy.ops.object.mode_set(mode='EDIT')
        bpy.ops.mesh.split_normals()

bpy.ops.object.mode_set(mode='OBJECT')
print(len(bpy.context.selected_objects))
obj = bpy.context.selected_objects[0]
context.view_layer.objects.active = obj

mesh_obj = obj
scale = args.scale
factor = max(mesh_obj.dimensions[0], mesh_obj.dimensions[1], mesh_obj.dimensions[2]) / scale
print('size of object:')
print(mesh_obj.dimensions)
print(factor)
object_details = bounds(mesh_obj)
print(
    object_details.x.min, object_details.x.max,
    object_details.y.min, object_details.y.max,
    object_details.z.min, object_details.z.max,
)
print(bounds(mesh_obj))
mesh_obj.scale[0] /= factor
mesh_obj.scale[1] /= factor
mesh_obj.scale[2] /= factor
bpy.ops.object.transform_apply(scale=True)

bpy.ops.object.light_add(type='AREA')
light2 = bpy.data.lights['Area']

light2.energy = 30000
bpy.data.objects['Area'].location[2] = 0.5
bpy.data.objects['Area'].scale[0] = 100
bpy.data.objects['Area'].scale[1] = 100
bpy.data.objects['Area'].scale[2] = 100

# Place camera
cam = scene.objects['Camera']
cam.location = (0, 1.2, 0)  # radius equals to 1
cam.data.lens = 35
cam.data.sensor_width = 32

cam_constraint = cam.constraints.new(type='TRACK_TO')
cam_constraint.track_axis = 'TRACK_NEGATIVE_Z'
cam_constraint.up_axis = 'UP_Y'

cam_empty = bpy.data.objects.new("Empty", None)
cam_empty.location = (0, 0, 0)
cam.parent = cam_empty

scene.collection.objects.link(cam_empty)
context.view_layer.objects.active = cam_empty
cam_constraint.target = cam_empty

stepsize = 360.0 / args.views
rotation_mode = 'XYZ'

model_identifier = os.path.split(os.path.split(args.obj)[0])[1]
synset_idx = args.obj.split('/')[-3]

img_follder = os.path.join(os.path.abspath(args.output_folder), 'img', synset_idx, model_identifier)
camera_follder = os.path.join(os.path.abspath(args.output_folder), 'camera', synset_idx, model_identifier)

os.makedirs(img_follder, exist_ok=True)
os.makedirs(camera_follder, exist_ok=True)

rotation_angle_list = np.random.rand(args.views)
elevation_angle_list = np.random.rand(args.views)
rotation_angle_list = rotation_angle_list * 360
elevation_angle_list = elevation_angle_list * 30
np.save(os.path.join(camera_follder, 'rotation'), rotation_angle_list)
np.save(os.path.join(camera_follder, 'elevation'), elevation_angle_list)

# creation of the transform.json
to_export = {
    'camera_angle_x': bpy.data.cameras[0].angle_x,
    "aabb": [[-scale/2,-scale/2,-scale/2],
             [scale/2,scale/2,scale/2]]
}
frames = []

for i in range(0, args.views):
    cam_empty.rotation_euler[2] = math.radians(rotation_angle_list[i])
    cam_empty.rotation_euler[0] = math.radians(elevation_angle_list[i])

    print("Rotation {}, {}".format((stepsize * i), math.radians(stepsize * i)))
    render_file_path = os.path.join(img_follder, '%03d.png' % (i))
    scene.render.filepath = render_file_path
    bpy.ops.render.render(write_still=True)
    # might not need it, but just in case cam is not updated correctly
    bpy.context.view_layer.update()

    rt = get_3x4_RT_matrix_from_blender(cam)
    pos, rt, scale = cam.matrix_world.decompose()

    rt = rt.to_matrix()
    matrix = []
    for ii in range(3):
        a = []
        for jj in range(3):
            a.append(rt[ii][jj])
        a.append(pos[ii])
        matrix.append(a)
    matrix.append([0,0,0,1])
    print(matrix)

    to_add = {
        "file_path":f'{str(i).zfill(3)}.png',
        "transform_matrix":matrix
    }
    frames.append(to_add)

to_export['frames'] = frames
with open(f'{img_follder}/transforms.json', 'w') as f:
    json.dump(to_export, f,indent=4)

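This is the rendering script. Its input arguments are as follows.

- views : number of views to render

- obj : path to the obj file to render

- output_folder : folder where the results are saved

- scale : scaling factor applied to the model

- format : file format of the generated images

- resolution : resolution of the generated images

- engine : Blender's built-in engine to use for rendering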
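One detail worth spelling out is how these arguments reach the script. Blender puts its whole command line in `sys.argv`, so render_shapenet.py keeps only what follows `--` before handing it to argparse. The sketch below reproduces that with a simulated argument list; `script_args` is a hypothetical helper, and only a subset of the parser is rebuilt here. It also shows why the `--output` flag used in render_all.py is accepted: argparse matches it as an unambiguous prefix of `--output_folder`.

```python
import argparse
from typing import List


def script_args(argv: List[str]) -> List[str]:
    """Return the arguments after the first "--" (empty list if absent).

    Hypothetical helper; the original script slices sys.argv directly.
    """
    if "--" not in argv:
        return []
    return argv[argv.index("--") + 1:]


# A subset of the parser defined in render_shapenet.py.
parser = argparse.ArgumentParser()
parser.add_argument("obj", type=str)
parser.add_argument("--views", type=int, default=24)
parser.add_argument("--output_folder", type=str, default="/tmp")
parser.add_argument("--scale", type=float, default=1)

# Simulated command line, in the shape Blender would expose it via sys.argv.
simulated = ["blender", "-b", "-P", "render_shapenet.py", "--",
             "--output", "./out", "model.obj", "--scale", "0.9"]
args = parser.parse_args(script_args(simulated))
print(args.output_folder, args.obj, args.scale, args.views)
```

Unlike the original slice, this helper returns an empty list instead of raising `ValueError` when `--` is missing, which is a design choice of the sketch rather than the script's behavior.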

In short, the script first parses the input arguments with ArgumentParser, configures the render settings through the bpy module, then imports the mesh and renders it from each view.
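To get a feel for where the camera ends up in each view, the position can be worked out by hand. The camera sits at (0, 1.2, 0) under an empty at the origin, and each view sets the empty's X rotation (elevation, 0 to 30 degrees) and Z rotation (0 to 360 degrees). The sketch below assumes that Blender's 'XYZ' Euler mode applies the X rotation first and the Z rotation last; `camera_position` is a hypothetical helper, and the exported transforms.json remains the reference for the actual poses.

```python
import math
from typing import Tuple


def camera_position(rotation_deg: float, elevation_deg: float,
                    radius: float = 1.2) -> Tuple[float, float, float]:
    """World position of a camera that starts at (0, radius, 0).

    Assumption: the parent empty is rotated about X by elevation_deg first,
    then about Z by rotation_deg (Rz @ Rx applied to the start position).
    """
    a = math.radians(rotation_deg)
    e = math.radians(elevation_deg)
    # Rx(e) maps (0, r, 0) to (0, r*cos(e), r*sin(e)); Rz(a) then turns
    # that point around the vertical axis.
    x = -radius * math.cos(e) * math.sin(a)
    y = radius * math.cos(e) * math.cos(a)
    z = radius * math.sin(e)
    return (x, y, z)
```

Under this assumption the camera always stays 1.2 units from the origin, and with the sampled elevation range its height above the origin is at most 1.2 * sin(30°) = 0.6.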

Execute Rendering

Run the script from a terminal with the following command.

python render_all.py --save_folder [save_folder] --dataset_folder [dataset_folder] --blender_root [blender_root]

For example, to apply it to ShapeNetCoreV1, I ran the following.

python ./render_all.py --save_folder ./ShapeNetCoreV1/rendered --dataset_folder ./ShapeNetCoreV1/ShapeNetCore.v1 --blender_root ~/dev/apps/blender-2.90.0-linux64/blender

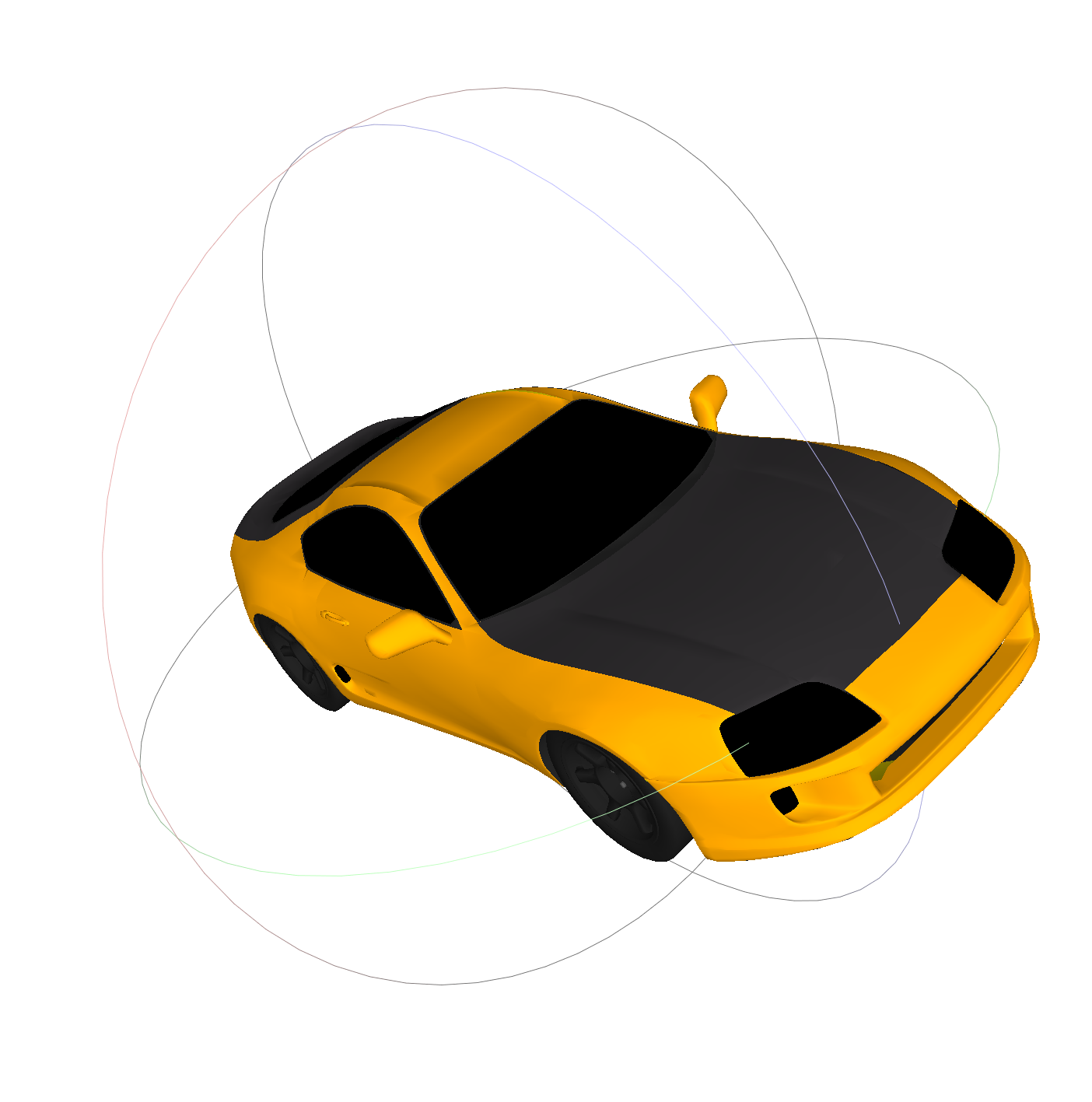

The obj file (3D model) opened in MeshLab and the images rendered and saved from it are shown here (24 views in total).
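Besides looking at the images, the transforms.json written next to them (under `<save_folder>/img/<synset>/<model_id>/`) can be checked quickly. The sketch below counts the frames, verifies that each pose is a 4x4 matrix, and converts `camera_angle_x` to a focal length in pixels with the usual pinhole relation; `focal_length_px` and `summarize_transforms` are hypothetical helper names, and the default width of 1024 is the resolution render_all.py passes.

```python
import json
import math
from typing import Tuple


def focal_length_px(camera_angle_x: float, image_width: int) -> float:
    """Pinhole focal length in pixels from the horizontal field of view (radians)."""
    return 0.5 * image_width / math.tan(0.5 * camera_angle_x)


def summarize_transforms(path: str, image_width: int = 1024) -> Tuple[int, float]:
    """Return (number of frames, focal length in pixels) for a transforms.json.

    Raises ValueError if any frame's transform_matrix is not 4x4.
    """
    with open(path) as f:
        data = json.load(f)
    frames = data["frames"]
    for frame in frames:
        matrix = frame["transform_matrix"]
        if len(matrix) != 4 or any(len(row) != 4 for row in matrix):
            raise ValueError("unexpected matrix shape in " + frame["file_path"])
    return len(frames), focal_length_px(data["camera_angle_x"], image_width)
```

With the 35 mm lens and 32 mm sensor width set in the script, a 1024-pixel-wide image works out to a focal length of 512 * 35 / 16 = 1120 pixels, so a value far from that suggests the camera settings were changed.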